Quality Control (QC) Best Practice

Quality Control (QC) best practices help ensure that analytical systems consistently meet required standards and work properly.

Calibration Standards

Calibration standards are crucial for ensuring accurate and reliable analytical results.

· Choose appropriate calibration standards with known concentrations of the analyte(s) that match the expected concentration range in your sample matrix. Ensure that these standards are prepared accurately and stored properly.
· Use consistent and accurate parameters on the gas chromatograph (GC), for example carrier gas and temperature settings.
· Inject a single standard (single point calibration) or a series of standards (multipoint calibration) to establish the calibration.
· Run a QC sample of known concentration to confirm the accuracy of the calibration.
· Perform regular calibration checks to maintain reliability, and recalibrate the system when a check falls outside its acceptance criteria.
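Preparing standards that span the expected range usually comes down to diluting a stock solution. A minimal sketch, assuming each level is made directly from one stock using C1 × V1 = C2 × V2; the function name `dilution_volumes`, the 1000 µg/mL stock, the 10 mL flasks and the five levels are hypothetical placeholders, not values from this note.

```python
def dilution_volumes(stock_conc, target_concs, final_volume):
    """Volume of stock to dilute to final_volume for each target level.

    Uses C1 * V1 = C2 * V2, so V1 = C2 * V2 / C1. Units only need to be
    consistent (e.g. µg/mL for concentrations, mL for volumes).
    """
    volumes = []
    for target in target_concs:
        if target > stock_conc:
            raise ValueError(f"target {target} exceeds stock concentration {stock_conc}")
        volumes.append(target * final_volume / stock_conc)
    return volumes


# Hypothetical example values, not taken from any method.
STOCK = 1000.0                            # µg/mL
FLASK = 10.0                              # mL
LEVELS = [5.0, 10.0, 25.0, 50.0, 100.0]   # µg/mL, assumed to span the sample range

for conc, vol in zip(LEVELS, dilution_volumes(STOCK, LEVELS, FLASK)):
    print(f"{conc:6.1f} µg/mL: {vol * 1000:.0f} µL stock, made up to {FLASK:.0f} mL")
```

Very low levels may instead be made by serial dilution to avoid pipetting very small volumes of stock; the direct approach is shown only because it is the simplest to follow.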

Single point calibration

Single point calibration is the least used type of calibration for standardizing a method. The result is based on only one concentration point.

A single standard of known concentration is used to establish the relationship between detector response and analyte concentration. This approach is effective for systems with stable performance and a linear detector response. The response factor (RF) is calculated as:

RF = detector response / concentration of standard

The analyte concentration in a sample is then its detector response divided by the RF. The method assumes that the detector response is proportional to analyte concentration (a straight line through the origin), which may not always be valid.
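The two steps of single point quantitation can be sketched as follows, assuming peak area as the detector response and a calibration line through the origin. The function names and the example numbers (a 50 µg/mL standard giving a peak area of 125000) are hypothetical.

```python
def response_factor(std_response, std_conc):
    """RF = detector response / concentration of standard."""
    if std_conc <= 0:
        raise ValueError("standard concentration must be positive")
    return std_response / std_conc


def quantify_single_point(sample_response, rf):
    """Sample concentration = sample response / RF.

    Valid only if the response is proportional to concentration,
    i.e. the calibration line passes through the origin.
    """
    return sample_response / rf


# Hypothetical data: 50 µg/mL standard, peak area 125000; sample peak area 87500.
rf = response_factor(125000.0, 50.0)
conc = quantify_single_point(87500.0, rf)
print(f"RF = {rf:.0f} area counts per µg/mL; sample = {conc:.1f} µg/mL")
# prints: RF = 2500 area counts per µg/mL; sample = 35.0 µg/mL
```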

Multipoint calibration

Unlike single point calibration, multipoint calibration uses standards at several concentrations. This is especially useful when analysing samples with varying concentration levels, or when the linearity of the system needs to be verified.

| The concentration range is chosen to cover the expected concentration range of in the samples or as per the industry standard method. A series of known concentrations of the analyte(s) are prepared.

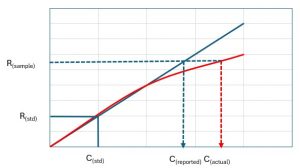

The analyte peak areas, or height, are plotted against the known concentrations which will generate a calibration curve. The injected standards will be compared to the expected values. The correlation coefficient (R2) is used to assess the linearity. If this value is close to 1 it indicates a strong linear relationship. The specification for this number is often defined by regulations. The peak areas of the unknown samples are then compared to the calibration curve to determine their concentrations. A single calibration standard is used to validate the identity of the unknown peaks based on the retention time |
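The curve fitting, linearity check and back-calculation described above can be sketched with an ordinary least-squares straight line. This is a minimal sketch, assuming an unweighted linear fit of peak area against concentration; real methods may specify weighting or a different model, and the function names and the five-level data set are hypothetical.

```python
def fit_calibration(concs, responses):
    """Unweighted least-squares line: response = slope * conc + intercept.

    Returns (slope, intercept, r_squared), where r_squared is the
    coefficient of determination 1 - SS_res / SS_tot.
    """
    n = len(concs)
    if n < 2 or n != len(responses):
        raise ValueError("need at least two paired points")
    mean_x = sum(concs) / n
    mean_y = sum(responses) / n
    sxx = sum((x - mean_x) ** 2 for x in concs)
    if sxx == 0:
        raise ValueError("standards must have different concentrations")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(concs, responses))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(concs, responses))
    ss_tot = sum((y - mean_y) ** 2 for y in responses)
    if ss_tot == 0:
        raise ValueError("responses do not vary; no usable calibration")
    r_squared = 1.0 - ss_res / ss_tot
    return slope, intercept, r_squared


def back_calculate(response, slope, intercept):
    """Read a concentration off the calibration line for a given peak area."""
    return (response - intercept) / slope


# Hypothetical calibration data (µg/mL vs peak area).
CONCS = [5.0, 10.0, 25.0, 50.0, 100.0]
AREAS = [12600.0, 25100.0, 62300.0, 125400.0, 249800.0]

slope, intercept, r2 = fit_calibration(CONCS, AREAS)
print(f"slope = {slope:.2f}, intercept = {intercept:.1f}, R² = {r2:.5f}")

# Compare each standard with its expected (nominal) value.
for nominal, area in zip(CONCS, AREAS):
    found = back_calculate(area, slope, intercept)
    print(f"{nominal:6.1f} µg/mL -> {found:7.2f} µg/mL ({(found - nominal) / nominal * 100:+.1f} %)")

# Quantify an unknown sample from its peak area.
print(f"unknown: {back_calculate(87500.0, slope, intercept):.2f} µg/mL")
```

Printing the back-calculated value of each standard shows how well individual levels fit, which a high R² alone can hide; the acceptance limits for both come from the applicable regulation or SOP.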

QC samples

QC samples can be used throughout an analytical run to confirm that the instrument and the sample preparation steps are performing as expected.

QC samples can be run in subsequent batches without repeating the full calibration curve each time; how often a full calibration is needed would be established during method validation. For example, run the calibration curve and QC samples on day 1, then only QC samples on days 2–4. QC samples are typically prepared from analytical standards independent of those used for calibration. They may be prepared at a single concentration or at multiple concentrations (this may be defined by regulation). A good rule of thumb is to prepare a QC sample at the midpoint of the linear range.

Best practice is to use spiked samples, in which a known concentration of the target analyte(s) is added to the sample before any preparation steps. This verifies that the whole analytical process, from sample preparation through injection and data processing, is within specification, giving confidence in the results. This is done by calculating the % recovery of the target analyte(s) and comparing it with the defined pass/fail criteria (from the relevant regulation or the lab SOP).

For routine analysis, QC samples bracket the samples throughout the analytical run, e.g. QC 1, sample 1, sample 2, QC 2. For each QC bracket that passes, the results of the samples in between can be trusted. The number of samples in each bracket may be defined by regulation or by the individual lab's SOP. The number of QC samples is optimised during method validation, depending on factors such as carry-over and sample stability.
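The recovery calculation and bracketing logic can be sketched as below. This is a minimal sketch under stated assumptions: % recovery is computed as (amount found in the spiked sample − amount found in the unspiked sample) / amount added × 100, a common form for spiked samples; a sample is trusted only when the QC injections on both sides of it pass; and the 80–120 % window, the function names and the run sequence are hypothetical placeholders for the criteria in the relevant regulation or lab SOP.

```python
def percent_recovery(found_spiked, amount_added, found_unspiked=0.0):
    """% recovery = (found in spiked - found in unspiked) / amount added * 100."""
    if amount_added <= 0:
        raise ValueError("amount added must be positive")
    return (found_spiked - found_unspiked) / amount_added * 100.0


def trusted_samples(run, low, high):
    """Return IDs of samples that sit between two passing QC injections.

    run is the injection sequence as (kind, id, recovery) tuples, where kind
    is "QC" or "sample"; recovery is only used for QC entries.
    Samples before the first QC or after the last QC are never trusted.
    """
    trusted = []
    pending = []          # samples injected since the last QC
    prev_qc_ok = False    # no opening QC seen yet
    for kind, ident, recovery in run:
        if kind == "QC":
            qc_ok = low <= recovery <= high
            if prev_qc_ok and qc_ok:
                trusted.extend(pending)   # bracket passed on both sides
            pending = []
            prev_qc_ok = qc_ok
        else:
            pending.append(ident)
    return trusted


# Hypothetical acceptance window and run sequence.
LOW, HIGH = 80.0, 120.0
qc1 = percent_recovery(11.6, 10.0, found_unspiked=2.0)   # about 96 %
example_run = [
    ("QC", "QC 1", qc1),
    ("sample", "S1", None),
    ("sample", "S2", None),
    ("QC", "QC 2", 103.5),
    ("sample", "S3", None),
    ("sample", "S4", None),
    ("QC", "QC 3", 72.0),   # fails, so S3 and S4 are not trusted
]
print(trusted_samples(example_run, LOW, HIGH))   # ['S1', 'S2']
```

Because a failing QC closes one bracket and opens the next, in this sketch it invalidates the samples on both sides of it; how a real lab handles a failure (re-injection, re-preparation, reporting) is set by its SOP.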
